Baseline System

More information about how to run and use the baseline models is available in this GitHub repository.

All participants are supplied with two baselines:


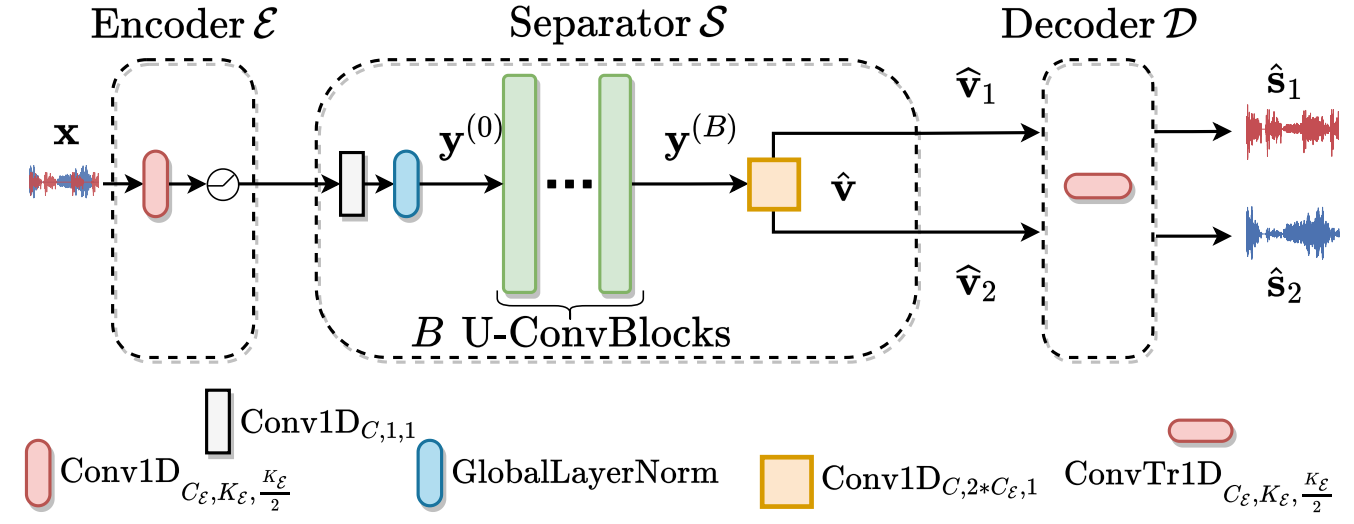

A) A fully supervised baseline (Sudo rm -rf) trained on out-of-domain labeled data (the Libri3mix dataset, adapted to vary the number of speakers between 1 and 3) using an efficient mask-based convolutional model [1, 2].


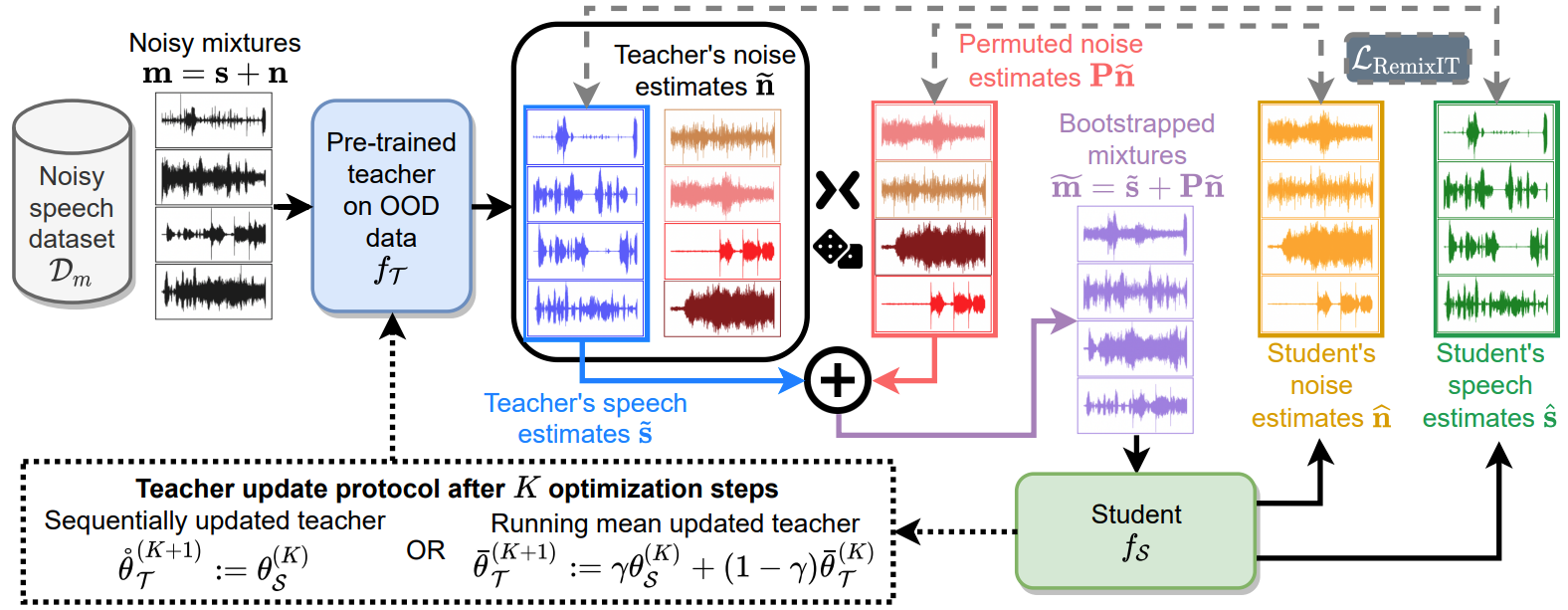

B) A self-supervised adaptation baseline, which is the student network in a RemixIT [3] teacher-student framework. RemixIT is a fully unsupervised domain adaptation method for speech enhancement that needs neither isolated in-domain speech nor isolated noise waveforms, and it uses the pre-trained Sudo rm -rf model from A) as the teacher. A schematic representation of the RemixIT algorithm is given below:

Fully supervised Sudo rm -rf out-of-domain teacher

- The supervised Sudo rm -rf model has been trained on the out-of-domain (OOD) Libri3mix data using the available isolated clean speech and noise signals, where the proportion of 1-speaker, 2-speaker, and 3-speaker mixtures is set to 0.5, 0.25, and 0.25, respectively.

- The trained model has an encoder/decoder with 512 basis functions, 41 filter taps, and a hop size of 20 samples, and a depth of U = 8 U-ConvBlocks.

- The loss function is the negative scale-invariant signal-to-noise ratio (SI-SNR), with equal weights on the speaker mix and the noise component.
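For reference, the loss described in the last bullet can be written out as follows. This is a sketch based on the standard SI-SNR definition. The symbols are notational assumptions rather than names from the baseline code: $s$ and $n$ denote the reference speaker mix and noise, $\hat{s}$ and $\hat{n}$ the model estimates, and $\alpha$ the optimal scaling of the reference. The weight of $1/2$ per term is one way to realize "equal weights".

```latex
\mathrm{SI\text{-}SNR}(\hat{s}, s)
  = 10 \log_{10} \frac{\lVert \alpha s \rVert^{2}}{\lVert \alpha s - \hat{s} \rVert^{2}},
\qquad
\alpha = \frac{\hat{s}^{\top} s}{\lVert s \rVert^{2}}

\mathcal{L}_{\text{teacher}}
  = -\tfrac{1}{2} \left[ \mathrm{SI\text{-}SNR}(\hat{s}, s) + \mathrm{SI\text{-}SNR}(\hat{n}, n) \right]
```

Because the reference is rescaled by $\alpha$ before the comparison, multiplying an estimate by a nonzero constant leaves its SI-SNR unchanged.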

Self-supervised RemixIT student

- RemixIT starts from the pre-trained OOD supervised teacher and initializes the student as an identical network from the same checkpoint.


In a nutshell, RemixIT uses the mixtures from the CHiME-5 data in the following way:

- 1) it feeds a batch of CHiME-5 mixtures to the frozen teacher to obtain estimated speech and estimated noise waveforms;

- 2) it permutes the teacher’s estimated noise waveforms across the batch dimension;

- 3) it synthesizes new bootstrapped mixtures by adding the teacher’s speech estimates to the permuted noise estimates;

- 4) it trains the student model on the bootstrapped mixtures, using the teacher’s estimates as pseudo-targets.

The loss function is the negative scale-invariant signal-to-noise ratio (SI-SNR), with equal weights on the speech and noise pseudo-targets provided by the teacher network.
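The four steps and the loss above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the baseline implementation. The function names (`si_snr`, `remixit_batch`, `student_loss`), the toy teacher that splits each mixture 70/30, the 16 kHz clip length, and the weight of 0.5 per term are all hypothetical. The real baseline uses the Sudo rm -rf networks and gradient-based training, which are omitted here.

```python
import numpy as np


def si_snr(est, ref, eps=1e-8):
    """Scale-invariant SNR in dB over the last axis (no mean removal)."""
    # Project the estimate onto the reference to get the scaled target.
    alpha = (est * ref).sum(axis=-1, keepdims=True) / (
        (ref ** 2).sum(axis=-1, keepdims=True) + eps
    )
    target = alpha * ref
    residual = est - target
    return 10.0 * np.log10(
        ((target ** 2).sum(axis=-1) + eps) / ((residual ** 2).sum(axis=-1) + eps)
    )


def remixit_batch(mixtures, teacher, rng):
    """Steps 1-3: build bootstrapped mixtures and their pseudo-targets."""
    # Step 1: the teacher separates each in-domain mixture.
    speech_est, noise_est = teacher(mixtures)
    # Step 2: shuffle the noise estimates across the batch dimension.
    perm = rng.permutation(mixtures.shape[0])
    noise_perm = noise_est[perm]
    # Step 3: remix the speech estimates with the shuffled noise estimates.
    boot_mix = speech_est + noise_perm
    return boot_mix, speech_est, noise_perm


def student_loss(student, boot_mix, speech_tgt, noise_tgt):
    """Step 4: negative SI-SNR with equal weights on speech and noise."""
    speech_hat, noise_hat = student(boot_mix)
    per_example = -0.5 * (si_snr(speech_hat, speech_tgt) + si_snr(noise_hat, noise_tgt))
    return per_example.mean()


# Toy usage with a hypothetical teacher that splits each mixture 70/30.
rng = np.random.default_rng(0)
mixtures = rng.standard_normal((4, 16000))  # 4 clips, 1 s each at an assumed 16 kHz
toy_teacher = lambda x: (0.7 * x, 0.3 * x)
boot_mix, speech_tgt, noise_tgt = remixit_batch(mixtures, toy_teacher, rng)
print(student_loss(toy_teacher, boot_mix, speech_tgt, noise_tgt))
```

Note that the noise pseudo-target is the permuted noise estimate, since that is the noise actually present in each bootstrapped mixture.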

References

[1] Tzinis, E., Wang, Z., & Smaragdis, P. (2020, September). Sudo rm -rf: Efficient networks for universal audio source separation. In 2020 IEEE 30th International Workshop on Machine Learning for Signal Processing (MLSP). https://arxiv.org/abs/2007.06833

[2] Tzinis, E., Wang, Z., Jiang, X., & Smaragdis, P. (2022). Compute and memory efficient universal sound source separation. Journal of Signal Processing Systems, 94(2), 245–259. https://arxiv.org/pdf/2103.02644.pdf

[3] Tzinis, E., Adi, Y., Ithapu, V. K., Xu, B., Smaragdis, P., & Kumar, A. (2022, October). RemixIT: Continual self-training of speech enhancement models via bootstrapped remixing. IEEE Journal of Selected Topics in Signal Processing. https://arxiv.org/abs/2202.08862