Overview

CHiME-5 targets the problem of distant microphone conversational speech recognition in everyday home environments. Speech material has been collected from twenty real dinner parties that have taken place in real homes. The parties have been made using multiple 4-channel microphone arrays and have been fully transcribed. The challenge features:

- simultaneous recordings from multiple microphone arrays;

- real conversation, i.e. talkers speaking in a relaxed and unscripted fashion;

- a range of room acoustics from 20 different homes each with two or three separate recording areas;

- real domestic noise backgrounds, e.g., kitchen appliances, air conditioning, movement, etc.

The scenario

The dataset is made up of the recording of twenty separate dinner parties that are taking place in real homes. Each dinner party has four participants - two acting as hosts and two as guests. The party members are all friends who know each other well and who are instructed to behave naturally. Efforts have been taken to make the parties as natural as possible. The only constraints are that each party should last a minimum of 2 hours and should be composed of three phases, each corresponding to a different location:

- kitchen - preparing the meal in the kitchen area;

- dining - eating the meal in the dining area;

- living - a post-dinner period in a separate living room area.

The recording set up

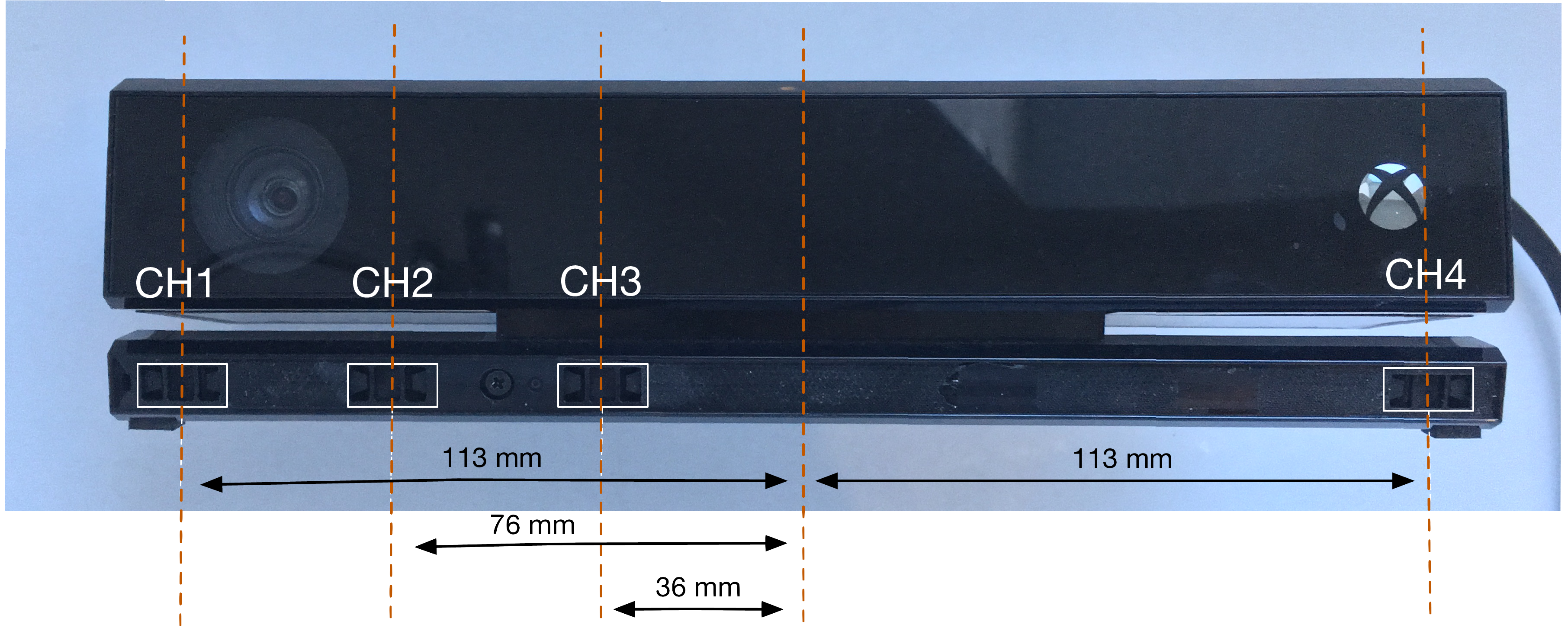

Each party has been recorded with a set of six Microsoft Kinect devices. The devices have been strategically placed such that there are always at least two capturing the activity in each location. Each Kinect device has a linear array of 4 sample-synchronised microphones and a camera. The microphone array geometry is illustrated in the figure below.